Dr Per Ola Kristensson is one of 35 top young innovators named today by the prestigious MIT Technology Review.

For over a decade, the global media company has recognised a list of exceptionally talented technologists whose work has great potential to “transform the world.”

Dr Kristensson (34) joins a stellar list of technological talent. Previous winners include Larry Page and Sergey Brin, the cofounders of Google; Mark Zuckerberg, the cofounder of Facebook; Jonathan Ive, the chief designer of Apple; and David Karp, the creator of Tumblr.

The award recognises Per Ola’s work at the intersection of artificial intelligence and human-computer interaction. He builds intelligent interactive systems that enable people to be more creative, expressive and satisfied in their daily lives, focusing on text entry interfaces and other interaction techniques.

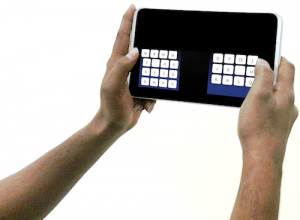

One example is the gesture keyboard, which enables users to quickly and accurately write text on mobile devices by sliding a finger across a touchscreen keyboard. To write “the”, the user touches the T key, slides to the H key, then the E key, and then lifts the finger. The result is a shorthand gesture for the word “the”, which a recognition algorithm can identify as the user’s intended word. Today, gesture keyboards are found in products such as ShapeWriter, Swype and T9 Trace, and come pre-installed on Android phones. Per Ola’s own ShapeWriter, Inc. iPhone app, ranked the 8th best app by Time Magazine in 2008, had a million downloads in the first few months.

Two factors explain the success of the gesture keyboard: speed and ease of adoption. Gesture keyboards are faster than regular touchscreen keyboards because expert users can quickly gesture a word by direct recall from motor memory. The gesture keyboard is easy to adopt because it enables users to smoothly and unconsciously transition from slow visual tracing to this fast recall from motor memory. Novice users spell out words by sliding their finger from letter to letter using visually guided movements. With repetition, the gesture gradually builds up in the user’s motor memory until it can be quickly recalled.

A gesture keyboard works by matching the gesture made on the keyboard against a set of possible words, and then deciding which word is intended by looking at both the gesture and the contents of the sentence being entered. This can require checking as many as 60,000 possible words, and doing so quickly on a mobile phone required developing new techniques for searching, indexing, and caching.
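To make the matching step concrete, here is a minimal Python sketch. It is not the algorithm used in ShapeWriter or described in the SHARK2 paper. The key coordinates, the `sigma` parameter, the function names, and the use of a plain word-frequency prior in place of sentence context are all assumptions chosen for illustration. Each word's ideal path runs through the centres of its keys; the gesture and the ideal path are resampled to the same number of points and compared point by point, and the resulting distance is combined with the word's prior probability.

```python
import math

# Hypothetical key centres on a unit grid for a QWERTY layout (illustrative only).
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEYS = {
    ch: (col + offset, float(row))
    for row, (letters, offset) in enumerate(zip(ROWS, ROW_OFFSETS))
    for col, ch in enumerate(letters)
}


def resample(points, n=32):
    """Resample a polyline to n points equally spaced along its length."""
    dists = [0.0]  # cumulative path length at each input point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    if total == 0.0:  # a single point, or all points identical
        return [points[0]] * n
    out, seg = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target length.
        while seg < len(points) - 2 and dists[seg + 1] < target:
            seg += 1
        span = dists[seg + 1] - dists[seg]
        t = 0.0 if span == 0.0 else min(1.0, (target - dists[seg]) / span)
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def word_template(word, n=32):
    """Ideal gesture for a word: the path through its key centres."""
    return resample([KEYS[c] for c in word], n)


def shape_distance(a, b):
    """Mean point-to-point distance between two equally sampled paths."""
    return sum(math.hypot(ax - bx, ay - by)
               for (ax, ay), (bx, by) in zip(a, b)) / len(a)


def recognise(gesture, lexicon, sigma=0.5, n=32):
    """Return the word with the best combined gesture and prior score.

    lexicon maps each candidate word to a prior probability greater than
    zero (assumed here to be a simple word frequency).
    """
    sampled = resample(gesture, n)
    scores = {}
    for word, prior in lexicon.items():
        d = shape_distance(sampled, word_template(word, n))
        # Log prior plus a Gaussian-style penalty on the path distance.
        scores[word] = math.log(prior) - (d * d) / (2 * sigma * sigma)
    return max(scores, key=scores.get)
```

Calling `recognise` with a slightly noisy trace from T to H to E and a small lexicon containing "the", "tie", "toe" and "he" picks "the". This sketch scans every word in the lexicon, which is exactly the cost that the searching, indexing, and caching techniques mentioned above are meant to avoid.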

An example of a gesture recognition algorithm is available here as an interactive Java demo: http://pokristensson.com/increc.html

There are many ways to improve gesture keyboard technology. One way to improve recognition accuracy is to use more sophisticated gesture recognition algorithms to compute the likelihood that a user’s gesture matches the shape of a word. Many researchers work on this problem. Another way is to use better language models. These models can be dramatically improved by identifying large bodies of text similar to what users want to write. This is often achieved by mining the web. Another way to improve language models is to use better estimation algorithms. For example, smoothing is the process of assigning some of the probability mass of the language model to word sequences the language model estimation algorithm has not seen. Smoothing tends to improve the language model’s ability to accurately predict words.
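As a small illustration of smoothing, the following Python sketch applies add-k (Laplace) smoothing to a bigram model. Add-k is only one of the simplest smoothing methods and is chosen here as an assumption for brevity; the text does not say which estimation algorithms are used in practice. The function names and the toy sentences are hypothetical.

```python
from collections import Counter


def train_bigram(sentences):
    """Count bigrams and their contexts; '<s>' marks the sentence start."""
    context_counts, bigram_counts = Counter(), Counter()
    vocab = set()
    for sentence in sentences:
        words = sentence.lower().split()
        vocab.update(words)  # '<s>' is a context only, never a predicted word
        tokens = ["<s>"] + words
        for prev, cur in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, cur)] += 1
    return context_counts, bigram_counts, vocab


def bigram_prob(prev, cur, context_counts, bigram_counts, vocab, k=1.0):
    """Add-k estimate of P(cur | prev).

    Every word pair receives k extra pseudo-counts, so pairs that never
    occurred in training still get a small non-zero probability.
    With k=0 this is the unsmoothed estimate (undefined for unseen contexts).
    """
    return (bigram_counts[(prev, cur)] + k) / (context_counts[prev] + k * len(vocab))
```

Trained on the two toy sentences "the cat sat" and "the dog sat", the unsmoothed model gives the unseen pair ("the", "sat") a probability of zero, while add-one smoothing gives it 1/6 and lowers the seen pair ("the", "cat") from 1/2 to 1/3. That shifted probability mass is what lets the model rank word sequences it has never observed.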

An interesting point about gesture keyboards is how they may disrupt other areas of computer input. Recently we have developed a system that enables a user to enter text via speech recognition, a gesture keyboard, or a combination of both. Users can fix speech recognition errors by simply gesturing the intended word. The system automatically realises there is a speech recognition error, locates it, and replaces the erroneous word with the result provided by the gesture keyboard. This is made possible by fusing the probabilistic information provided by speech recognition and the gesture keyboard.
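The idea of fusing the two sources can be shown with a toy Python sketch. This is not the method from the Interspeech 2011 paper. It assumes, purely for illustration, that the speech recogniser returns a list of word slots, each with a probability for its alternative words, and that the gesture keyboard returns a probability for each candidate word of a single gesture. The function name `fuse`, the data layout, and the `floor` parameter are hypothetical.

```python
def fuse(speech_slots, gesture_dist, floor=1e-6):
    """Locate the slot a gesture most plausibly corrects and replace it.

    speech_slots: list of dicts mapping word -> probability, one per position.
    gesture_dist: dict mapping word -> probability for one gesture.
    Returns (index of the corrected slot, corrected list of words).
    """
    if not speech_slots:
        raise ValueError("speech_slots must not be empty")
    best = None  # (evidence, slot index, replacement word)
    for i, slot in enumerate(speech_slots):
        # Joint score of each gesture candidate in this slot; the floor lets
        # a word win even if the speech recogniser never proposed it.
        joint = {w: (slot.get(w, 0.0) + floor) * p
                 for w, p in gesture_dist.items()}
        evidence = sum(joint.values())  # how well the gesture fits this slot
        word = max(joint, key=joint.get)
        if best is None or evidence > best[0]:
            best = (evidence, i, word)
    _, index, word = best
    # Start from the top speech hypothesis and overwrite the chosen slot.
    corrected = [max(slot, key=slot.get) for slot in speech_slots]
    corrected[index] = word
    return index, corrected


speech = [
    {"i": 0.9, "eye": 0.1},
    {"want": 0.8, "won't": 0.2},
    {"two": 0.5, "to": 0.4, "too": 0.1},
    {"go": 0.95, "no": 0.05},
]
gesture = {"to": 0.7, "too": 0.2, "go": 0.1}
index, words = fuse(speech, gesture)
```

In this example the gesture fits the third slot best, so the recognised "two" is replaced by "to" without the user having to point at the error. A real system would also need to handle insertions, deletions, and multi-word corrections, which this sketch ignores.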

Per Ola also works in the areas of multi-display systems, eye-tracking systems, and crowdsourcing and human computation. He takes on undergraduate and postgraduate project students and PhD students. If you are interested in working with him, you are encouraged to read http://pokristensson.com/phdposition.html

References:

Kristensson, P.O. and Zhai, S. 2004. SHARK2: a large vocabulary shorthand writing system for pen-based computers. In Proceedings of the 17th Annual ACM Symposium on User Interface Software and Technology (UIST 2004). ACM Press: 43-52.

(http://dx.doi.org/10.1145/1029632.1029640)

Kristensson, P.O. and Vertanen, K. 2011. Asynchronous multimodal text entry using speech and gesture keyboards. In Proceedings of the 12th Annual Conference of the International Speech Communication Association (Interspeech 2011). ISCA: 581-584.

(http://www.isca-speech.org/archive/interspeech_2011/i11_0581.html)
