Abstract:

From buying plane tickets to eGovernment, participation in consumer and civic society is predicated on continuous connectivity and copious computation. And yet for many at the edges of society, the elderly, the poor, the disabled, and those in rural areas, poor access to digital technology makes them more marginalised, potentially cut off from modern citizenship. I spent three and a half months last summer walking over a thousand miles around the margins of Wales in order to experience more directly some of the issues facing those on the physical edges of a modern nation, who are often also at the social and economic margins. I will talk about some of the theoretical and practical issues raised, and about how designing software with constrained resources is more challenging but potentially more rewarding than assuming everyone lives with Silicon Valley levels of connectivity.

Bio:

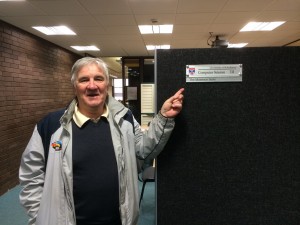

Alan is Professor of Computing at the University of Birmingham and Senior Researcher at Talis, based in Birmingham, but when not in Birmingham or elsewhere he lives on Tiree, a remote island off the west coast of Scotland.

Alan’s career has included mathematical modelling for agricultural crop sprayers, COBOL programming, submarine design and intelligent lighting. However, he is best known for his work in Human-Computer Interaction over three decades, including his well-known HCI textbook and some of the earliest work in formal methods, mobile interaction, and privacy in HCI. He has worked in posts across the university sector and also spent a period as founder director of two dotcom companies, aQtive (1998) and vfridge (2000), which, between them, attracted £850,000 of venture capital funding. He currently works part-time for the University of Birmingham and is on the REF Panel for Computer Science. He also works part-time for Talis, which, inter alia, provides the reading list software used at St Andrews.

His interests and research methods remain, as ever, eclectic, from formal methods to technical creativity and the modelling of regret. At present he is completing a book, TouchIT, about physicality in design, working with musicologists on next-generation digital archives, envisioning how learning analytics can inform and maybe transform university teaching, and working on various projects connected with communication and energy use on Tiree and in rural communities.

Last year he completed a walk around Wales as an exploration of technical issues ‘at the edge’, the topic of his seminar.

Event details

- When: 6th May 2014 14:00 - 15:00

- Where: Maths Theatre B

- Format: Seminar